Re-presenting visual content for blind people

A thesis submitted to the University of Manchester for the degree of Doctor of Philosophy in the Faculty of Humanities

Alasdair Robin King, 2006, School of Informatics

Abstract

This thesis addresses how blind people access information sources designed for sighted people, and specifically the process of accessing arbitrary documents through automated processes independent of any intervention by a sighted person.

A web browser and a technical diagram access tool were developed to re-present web pages and diagrams for blind people:

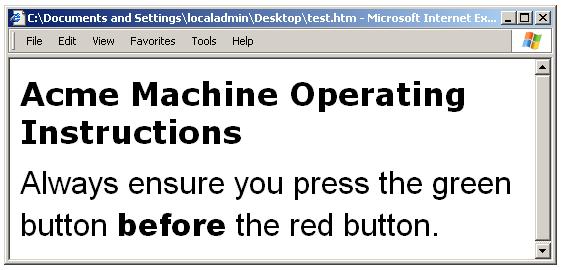

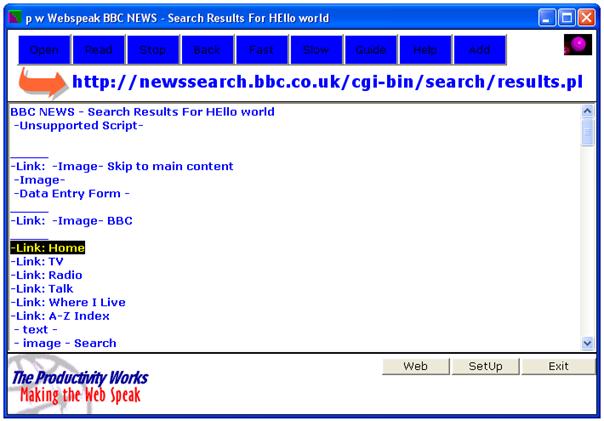

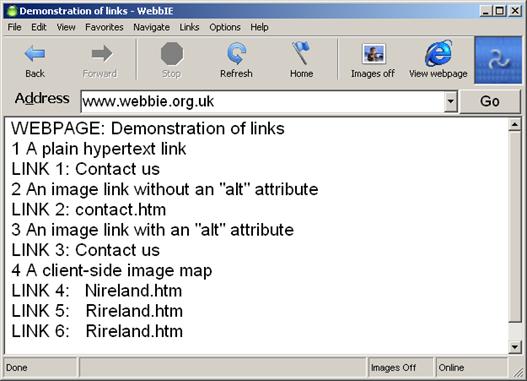

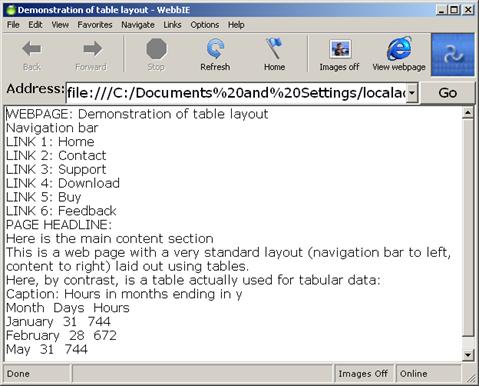

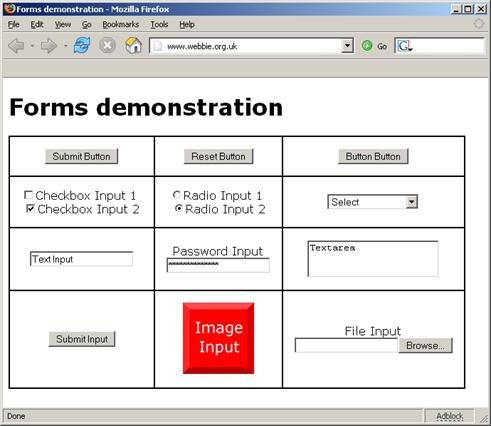

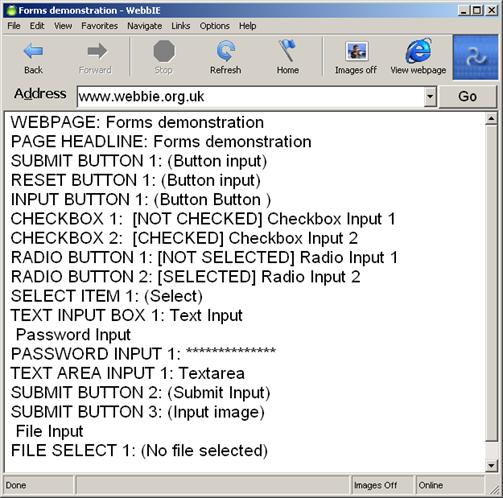

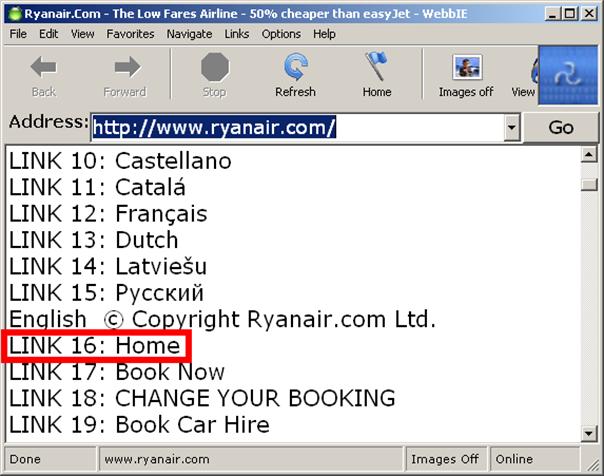

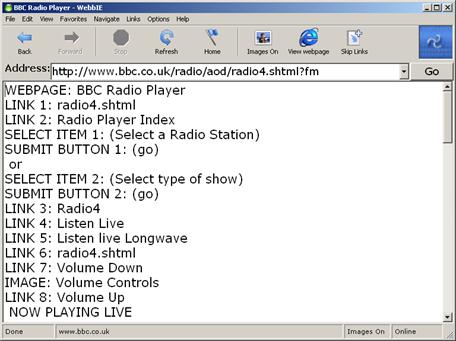

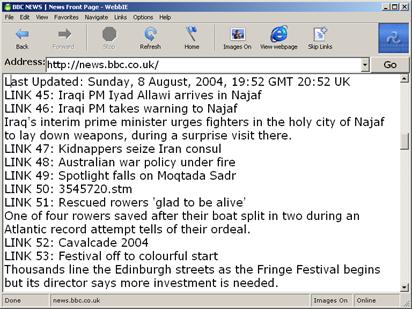

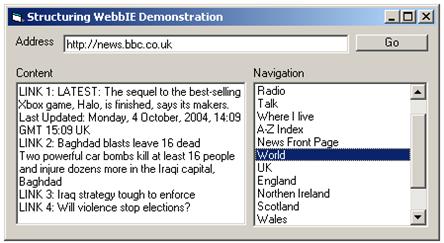

- The web browser presented web pages in a linear text format that permitted blind users to utilise their familiar screen reader. Evaluation confirmed that measured against other assistive technology it was successful in allowing blind users to access the majority of web pages, including those using forms, frames and JavaScript.

- The technical diagram access tool re-presented technical diagrams, including electronic circuit and UML software engineering diagrams, as a set of nodes organised into both a hierarchy and connected graph. Evaluation of the access tool indicated that users were able to access technical diagrams through the tool and successfully complete tasks.

The two applications handled spatial and layout information differently:

- No layout information was provided in the web browser. This did not detract from its use. A simple heuristic function allowed users to identify the content of interest on a web page.

- The diagram reader presented layout and spatial information with audio and haptic interfaces, but this re-presentation did not generally assist in the successful use of diagrams. Dedicated functions that supported user goals and tasks were most successful.

The thesis concludes that for web pages and technical diagrams their layout and spatial information need not be presented to blind people. Re-presentation is more successful when it supports user goals and tasks, if necessary by analysing layout and spatial information and explicitly communicating to blind people what sighted people can infer from it.

Declaration

No portion of the work referred to in the thesis has been submitted in support of an application for another degree or qualification of this or any other University, or other institution of learning.

Acknowledgements

My thanks to everyone who has helped me, including:

- Paul Blenkhorn for his inspiring ideas and encouragement.

- Sijo Dijkstra of FNB Netherlands and the TeDUB project user groups for performing the user testing.

- Roger Wilson-Hinds for his great support and advice on WebbIE.

- The citizenry of the European Union for funding my employment.

- My wife, Jo Taylor.

- Dave Stevens and other directors at Claro Software for their support.

Above all, my most sincere gratitude goes to Gareth Evans, without whom this thesis would not have been completed.

Copyright

Copyright in text of this thesis rests with the author. Copies (by any process) either in full, or of extracts, may be made only in accordance with instructions given by the author and lodged in the John Rylands University Library of Manchester. Details may be obtained from the Librarian. This page must form part of any such copies made. Further copies (by any process) of copies made in accordance with such instructions may not be made without the permission (in writing) of the author.

The ownership of any intellectual property rights which may be described in this thesis is vested in The University of Manchester, subject to any prior agreement to the contrary, and may not be made available for use by third parties without the written permission of the University, which will prescribe the terms and conditions of any such agreement.

Further information on the conditions under which disclosures and exploitation may take place is available from the Head of School of Informatics.

1. Introduction

Sighted people enjoy access to many visual sources of information, such as newspapers, web pages, sheet music, circuit diagrams and business charts. Until recently much of this information was available only through printed paper media, which are entirely inaccessible to blind people. Instead of print, blind people use touch (haptic) or hearing (audio) equivalents. Braille is one of the oldest haptic technologies, with around 13,000 users in the United Kingdom in 1986 (Bruce et al. 1991). Tape recordings and, latterly, computer-generated synthesised speech are more recent audio technologies and are now more widely used than Braille.

The problem is that the vast majority (95%) of books, newspapers and magazines are never made available in an accessible format (Mann et al. 2003) and there are often significant delays before the accessible format becomes available. Production of an accessible format has traditionally required a sighted intermediary to transcribe the document, so blind people had limited control over what they could obtain.

A more recent development is the availability of information sources using electronic instead of print media, including electronic books, diagrams as electronic files and web pages. Blind people have also acquired computers and the assistive technology to use them, and can use these computers to access some of the electronic formats. This process, computer-mediated transcription, has three major advantages for blind people. First, they can access information that was previously not available in an accessible format. Second, they can access it immediately (e.g. web pages) or with less delay (e.g. obtaining electronic versions of books directly from publishers rather than waiting for an audio or Braille version to be produced). Third, their access to information is now independent of sighted people. Bauwens et al. (1994) describe the process of computer-mediated transcription for publishing. A more prosaic example is that instead of waiting for an audio version of the London Times to be recorded and posted, a blind person can now access the newspaper’s website at any time and read the contents. Berry (1999) reports that blind people volunteer the word “empowering” to describe this.

These advantages apply only to computer-mediated transcription and therefore disappear if blind people are forced to rely on sighted intermediaries at any point in the process. Computer-mediated transcription must therefore be automated and it must be able to handle arbitrary documents, not documents pre-prepared by a sighted person by annotation or editing. This constrains what information sources are suitable:

1. The input format must be amenable to transcription by machine. For example, web pages are composed largely of structured text and can be transcribed relatively simply by obtaining this text and determining its structure. In contrast, automated transcription of a raster image file of a photograph into a meaningful text description (e.g. “this is a picture of a horse”) is currently impossible.

2. The information obtained in transcription must be communicated to a blind user in a way that they can use. This is the problem of re-presenting the information. A document that can be transcribed by machine may still be difficult to re-present. For example, a book is relatively simple to re-present using speech and a set of navigation controls. In contrast, the problem of re-presenting mathematical equations to blind people is very challenging (Stevens and Edwards, 1994).

This thesis is concerned with this second problem of re-presentation. There are many new information sources that can be transcribed. There are many problems with re-presenting them. This thesis describes the re-presentation of two information sources by means of actual working tools:

1. Web pages. These are hypertext documents available over the Internet, and so amenable to computer-mediated transcription.

2. Technical diagrams. These are also available in electronic formats. They may only be available as images (vector or raster) and different formats are more or less amenable to transcription. Re-presenting the outputs from the automated transcription of different formats is examined, including image analysis of bitmaps and conversion of a structured text format.

The problem of presenting structured text is relatively well understood and addressed (e.g. by talking books (DAISY, 2004)). However, there remain problems with re-presenting visual content. This is the structure, content and meaning that is apparent to sighted people examining a document. For example, a newspaper page structures text into articles and headlines with layout and formatting. An electronic circuit diagram represents a circuit as a connected graph of elements laid out in a two-dimensional plane where grouping implies structure and lines indicate connectivity. Visual content is immediately obvious and available to a sighted person looking at the original document. Sighted people can interpret it into meaningful information with ease. Visual content can serve three functions:

1. It may be used to structure the document, as with text structured in a newspaper.

2. Visual content may structure information so that it lends itself to problem-solving of particular problems by sighted people (Bennett and Edwards, 1998). For example, an electronic circuit diagram may lend itself to solving certain problems by laying out components to make certain relationships immediately apparent to a sighted user.

3. Visual content can be content in and of itself. For example, in an architectural floor-plan diagram the visual content (e.g. shape, location and orientation) is the information content itself.

All of these functions of visual content are available to sighted people. Blind people must rely on the re-presentation of the information, which may or may not include this visual content. This gives rise to two problems:

1. The function of the visual content may be implied in the final document but not explicitly present in the electronic source. For example, web designers often code structure into the final visual appearance of a web page (e.g. making a heading centred, bold and in a larger font) rather than into the structure of the code itself (e.g. marking up heading content with a dedicated heading element). This makes the web page difficult to use: without explicit structure, users have to locate an article of interest amongst all the advertisements. There are two solutions: either the function must be inferred from the visual content itself and presented to the user (e.g. identifying the headings by analysis of appearance and alerting the user), or the visual content must be communicated to the blind user directly and they must take responsibility for drawing the necessary inferences (e.g. indicating the appearance of text and leaving the user to identify the heading).

2. Communicating visual content is itself a challenging problem, with pragmatic complications (finding a usable user interface based on haptic or audio) and theoretical ones (whether blind people are actually able to use visual content presented to them). The visual content can be elided from the final re-presentation of a document, but this means that blind people would lose its structuring, content or support for problem-solving.
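The first solution in item 1, inferring function from appearance, can be illustrated with a minimal sketch. It is not the heuristic used by the tools described in this thesis: the `TextRun` type, the `looks_like_heading` function and every threshold are hypothetical, chosen only to show how the visual cues named above (size, weight, centring) might be combined into an explicit statement that a piece of text is a heading.

```python
from dataclasses import dataclass


@dataclass
class TextRun:
    """A run of rendered text plus the appearance a sighted reader would see."""
    text: str
    font_size: float      # in points
    bold: bool = False
    centred: bool = False


def looks_like_heading(run: TextRun, body_size: float) -> bool:
    """Guess from appearance alone whether a run is a heading.

    The thresholds are illustrative assumptions, not measured values.
    """
    score = 0
    if run.font_size >= body_size * 1.2:   # noticeably larger than body text
        score += 1
    if run.bold:
        score += 1
    if run.centred:
        score += 1
    # Require two cues, and keep it short: long bold passages are
    # more likely to be emphasised body text than headings.
    return score >= 2 and len(run.text.split()) <= 12


def infer_headings(runs, body_size=12.0):
    """Return the text of every run that appears to be a heading."""
    return [run.text for run in runs if looks_like_heading(run, body_size)]


# Hypothetical page content: an advertisement, a headline and body text.
page = [
    TextRun("Cheap flights", 10.0, bold=True),
    TextRun("Council approves new library", 18.0, bold=True, centred=True),
    TextRun("The council voted on Tuesday to fund a new library.", 12.0),
]
headings = infer_headings(page)
```

With the inferred headings available, a tool could announce them to the user directly; the alternative solution would instead report the size and weight of each run and leave the inference to the user.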

This thesis examines these two problems through the development of the web page and technical diagram re-presentation tools, evaluates the tools’ effectiveness, and draws general conclusions about the re-presentation of the visual content of these information sources. The motivation is to improve access for blind people to some of these sources by improving their re-presentation.

Chapter 2 classifies different information sources into types and uses this classification to identify approaches for the re-presentation of information sources according to their type. Chapters 3 and 4 describe the re-presentation tools (for technical diagrams and web pages, respectively). Chapter 5 returns to the general problem of re-presentation, attempts to draw conclusions about how to handle visual content, and identifies future work.

1.1. A note on Braille and speech

Reference is made to Braille use in this thesis, and it is obviously a very important issue for Braille users. Braille users access text through a Braille line or display, which is a form of reading (in that they can scan back and forth along the line and employ other techniques including layout) rather than listening (using text-to-speech). Braille use has many important implications for re-presentation, including user interface design, the communication of layout information and how users can interact with supplementary audio output. However, Braille use is low and declining, although it is higher in the skilled and technical groups who might be expected to be the natural users of some of the tools described in this thesis (Bruce et al., 1999). Text-to-speech technology is more widely available, less expensive and increasingly the norm. In addition, Braille use is such an important and specialised topic that it would warrant its own thesis. For these reasons, this thesis focuses on blind people who use a screen reader to produce speech rather than to drive a Braille display.

2. Visual content and blind people

There are many different information sources available to sighted people, such as printed text, web pages, and technical diagrams. Each type has its own conventions and representational techniques. All use visual formatting and appearance to structure information and provide semantics [1], and some provide explicitly spatial information with graphics and layout, such as floor-plans. This has important implications for the re-presentation of these information sources for blind people.

Section 2.1 outlines sight and the role of visual content in different information sources. Section 2.2 describes a classification of information sources based on these different roles. Section 2.3 describes how each class of information source might be re-presented to blind people. Section 2.4 draws this together to describe a model for re-presenting visual content and the information sources described. This will be evaluated in Chapters 3 and 4 by the development of tools based on this approach.

2.1. Sight and how information sources work

Sight is very powerful. Because of its power it shapes how sighted people record information. Sight has characteristics such as the aggregation of similar content by appearance (such as colour or shape), differentiation of content into discrete groups by locality, gestalt recognition (the ability to “see instantly” meaning and implied information), and saccading, moving the point of attention freely and rapidly across the information source in search of content of interest or to facilitate a process of understanding and inference from the document (Solso, 1995; Wickens, 1992). These characteristics give sighted users an innate ability to identify patterns, rapidly find information of interest and relevance, infer relationships and perform other problem-solving and information-retrieval tasks.

Information sources contain content which can be broken down into two types:

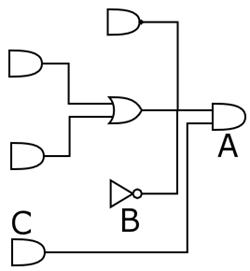

- Symbolic content is information that is conveyed by means of characters and other symbols. Paragraphs or text labels are examples of symbolic content. Symbolic content also includes defined graphical signifiers for which a text replacement is informationally equivalent: this is common in technical diagrams, where (for example) an OR Gate in a digital electronic circuit is consistently represented by a defined signifier.

- Visual content is information that is not conveyed through symbolic content but through visual appearance, such as layout, shape, orientation, and position. It is used to structure symbolic content (e.g. splitting a newspaper page into stories with headings), to provide semantics (e.g. emphasis, as in “I am *really* unhappy”) and can also be content in and of itself where it directly presents spatial information (e.g. a scale drawing of a floor-plan).

Blind people can access the symbolic content of an information source through speech in a straightforward manner. Text and the text equivalent of any signifiers can be read out. The re-presentation of symbolic content by speech is linear and sentential: words follow each other in a single sequence in time. By contrast, blind people must rely on some spatialised audio or haptic interface to access visual content, because visual content is non-linear and not sentential. For example, the arrangement of components in a circuit diagram has spatial, proximal and positional information in addition to the symbolic content of the components themselves. There is no direct and obvious sequence for the components in which they should be presented in speech. Some re-presentation approach is required [2].

Visual content and problem-solving

Visual content has a role beyond recording information. Larkin and Simon (1987) observe that two-dimensional presentations of information allow the information to be indexed by a two-dimensional location. Larkin and Simon’s work, and later work by Koedinger (1992), Kulpa (1994) and Barkowsky (2001, 2003), is based upon the reasoning that these two-dimensional presentations, or diagrams, are effective for sighted people because the localisation of information in a diagram makes it more computationally efficient than a sentential representation with the same information content. They regard the visual representation of information as a tool for a process of problem-solving and state that diagrams are therefore more efficient. Zhang and Norman (1994) expand this approach to two-dimensional visual representations in general, finding that different representations have an important influence on the problem-solving process users choose: the visual presentation of a problem can change the way that sighted people approach solving it and their success or failure. For example, Green and Petre (1996) examine the effects of different representations of programming problems in different software engineering approaches and identify different effects on problem-solving.

Bennett and Edwards (1998) took this work and applied it to the problem of diagram use by blind people. They observed that diagrams are not always more effective for sighted people, as demonstrated by Green and Petre’s study (1992) of visual programming languages, which found that the symbolic presentation of code was more effective for solving some problems. There is therefore no automatic superiority of visual presentations over symbolic ones. However, Bennett and Edwards argued that diagrammatic representations are effective when they allow problems to be solved more easily than sentential representations of the same information. More formally, two representations with informational equivalence may have different computational efficiency for a given set of user tasks. Diagrams help sighted users to perform certain tasks easily, and Bennett and Edwards suggested that communicating this spatial layout to blind users was necessary, because only when blind users experienced a similar profile of computational efficiency would they be able to use the diagrams effectively. On this argument, if a diagram favours a task for a sighted user, then it must also favour that task for a blind user.

Blind people and spatial layout

Visual content has many components, but the most important in the studies reported above and in the information sources to be examined is spatial layout. If blind people cannot use spatial layout then there is no purpose in presenting it to them. There is considerable evidence to support the proposition that blind people have sufficient spatial ability to use spatial information, although they have to employ deliberate strategies to do so where sighted people might do it without conscious effort. This evidence is largely derived from the investigation of navigation and maps. Ramsay and Petrie (2000) describe how movement and relative positioning could be communicated successfully to blind people. Ungar et al. (1996) found that blind children can use landmarks to navigate with tactile maps. Later work (Ungar et al. 1997) indicated that blind children can also judge distances from maps, though not as well as sighted children. Millar (1994), in detailed studies of blind children, supported the position that blind people have the ability to make use of spatial information. It does appear that blind people can make use of spatial layout, although Kitchin and Jacobson (1997) criticise the validity of previous investigations into geographic space.

2.2. Information sources that might be presented

The huge range of information sources necessitates some classification of what is to be addressed in this thesis. A complete taxonomy of diagram types is a philosophical problem and a daunting task: an attempt is made in Massironi (2002), but for this thesis information sources are divided into three types: textual, diagrammatic, and pictorial. These are descriptive titles, not prescriptive statements about content, but the three types have different characteristics that necessitate different approaches.

This classification is not exhaustive, and does not include several important types of document, such as musical notation and mathematics. This is a pragmatic decision to exclude information sources that have their own, specific problems for translation into an accessible format (e.g. McCann 2001 for music; Gardner et al. 1998 for maths).

2.2.1. Textual information sources

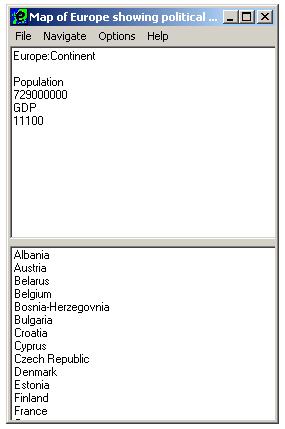

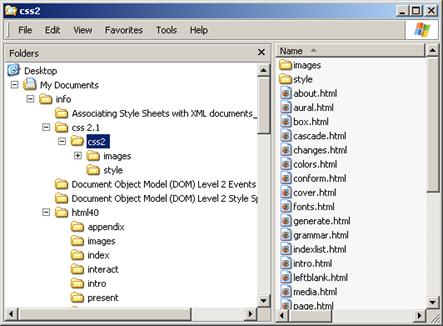

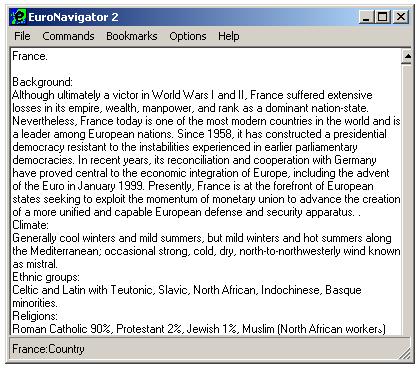

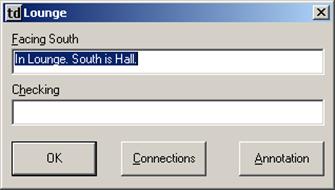

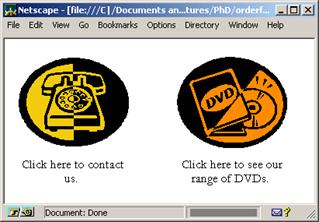

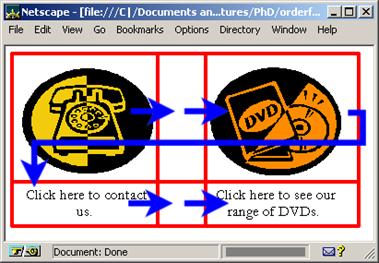

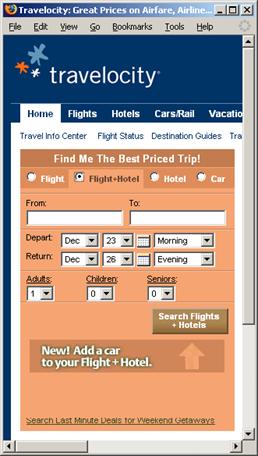

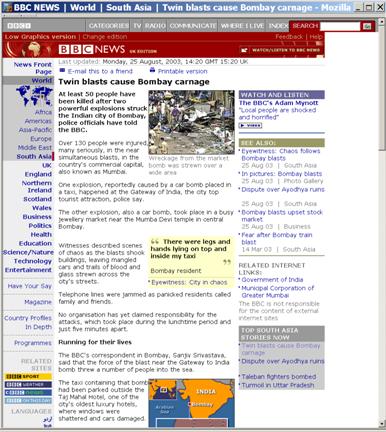

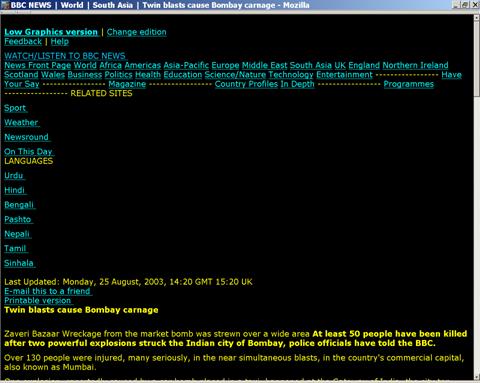

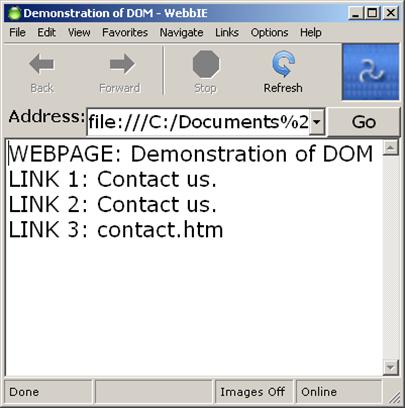

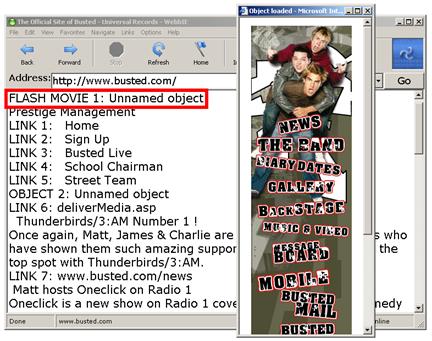

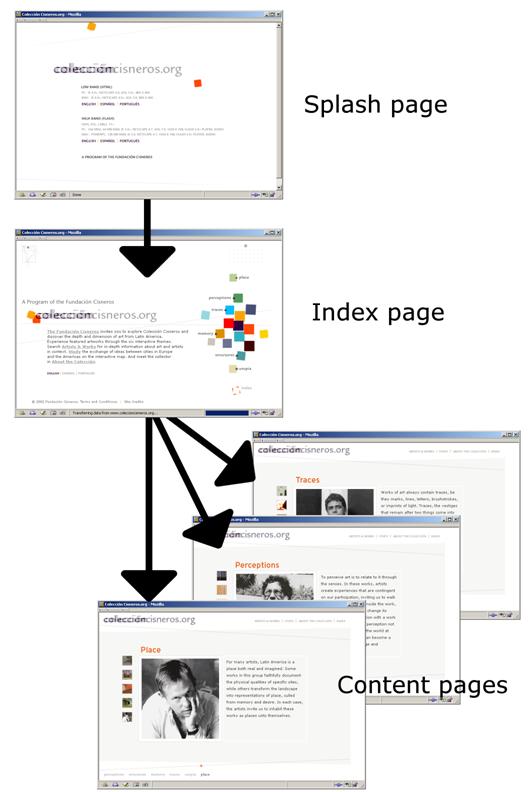

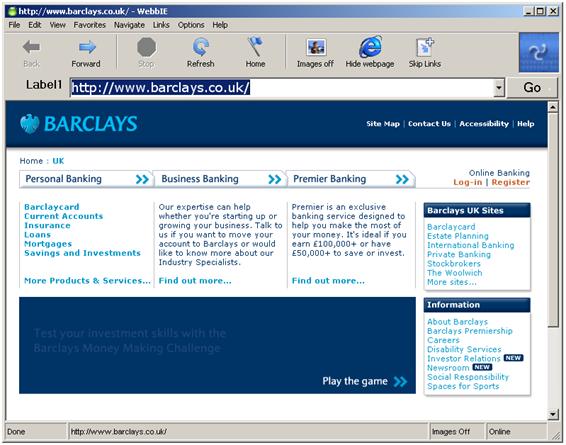

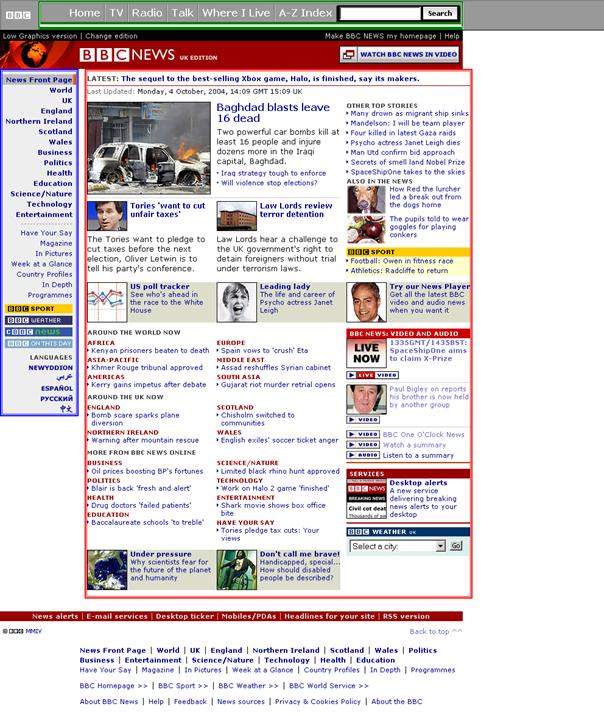

The first information source type is textual. Textual information sources are composed mainly of linear and sequential symbolic information. Examples include newspapers, books and web pages (Figure 1 and Figure 2). Visual content is used to convey structure, for example paragraphs and subsections in books, and semantics, for example identifying newspaper headlines by their appearance. Some restructuring and re-presentation is often desirable to facilitate use of the information sources by blind people, for example allowing a user to browse newspaper article headlines before accessing article contents.

Figure 1: a textual information source, a newspaper. From http://www.kaleo.info/. It contains images for illustrative purposes: they are not generally necessary to understand the document.

Figure 2: A textual information source, a web page (from http://www.guardian.co.uk).

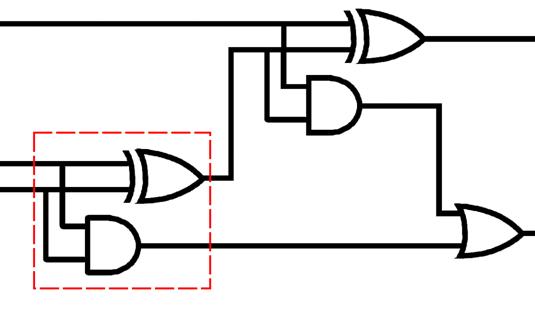

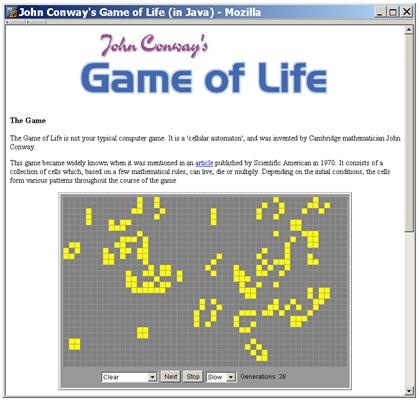

2.2.2. Diagrammatic information sources

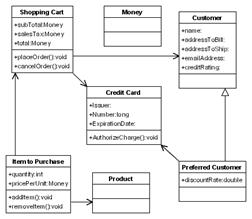

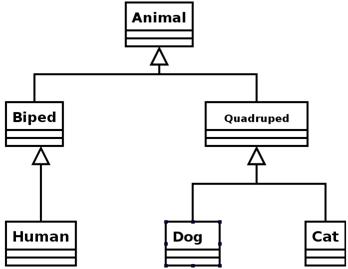

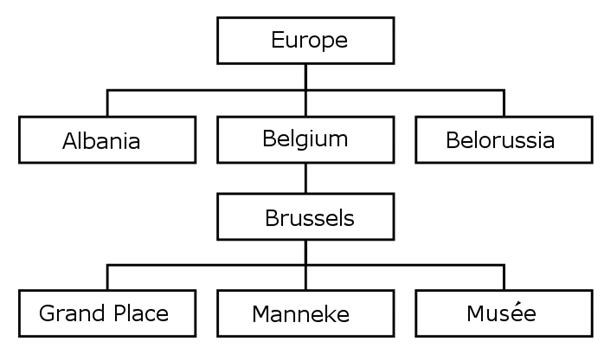

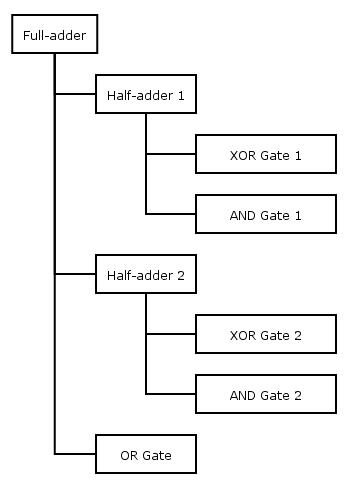

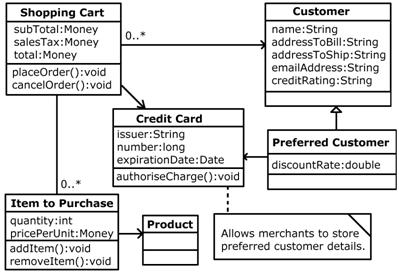

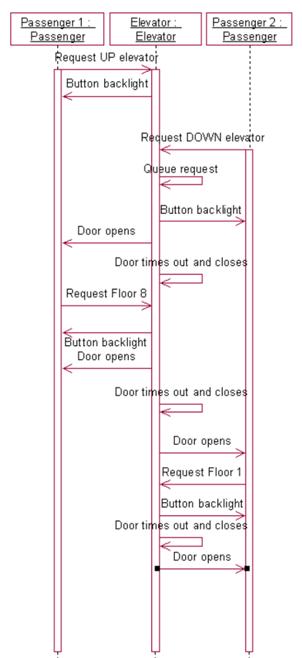

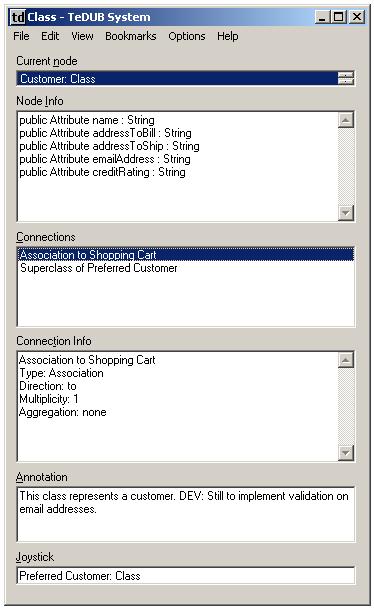

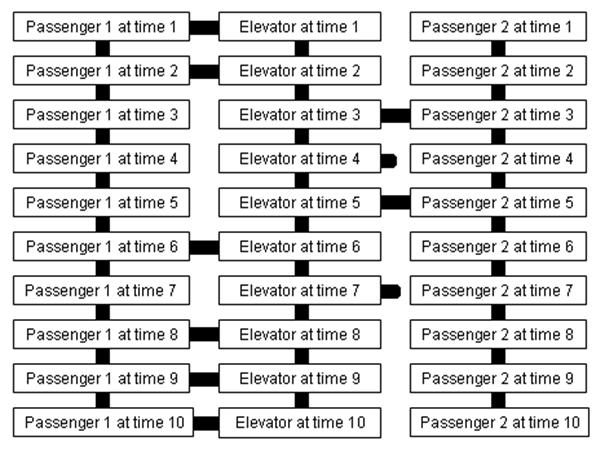

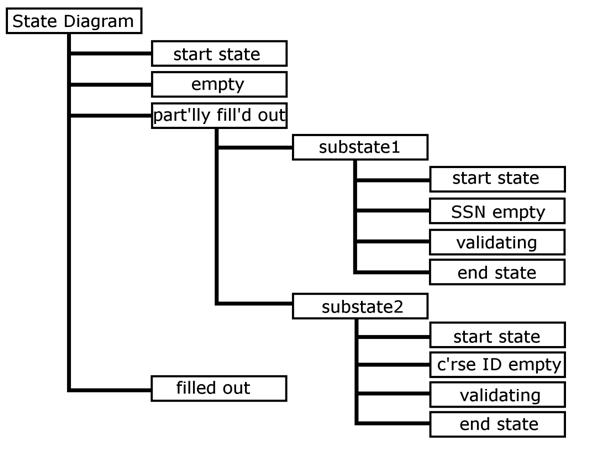

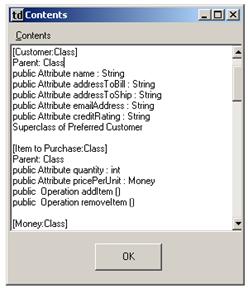

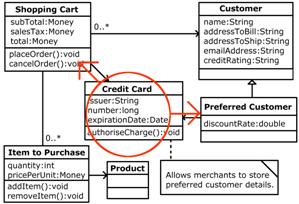

The second information source type is diagrammatic. This type includes electronic circuit diagrams and software engineering diagrams such as Data-Flow Diagrams and Unified Modelling Language (UML) diagrams (Figure 3). These are all examples of the diagrams defined by Larkin and Simon (1987). Diagrammatic information sources are composed of symbolic content located in a two-dimensional plane, where connectivity is important and meaningful but spatial position, either absolute or relative, does not generally have any explicit or implicit meaning. For example, Figure 3 shows the same diagram with two different layouts, yet the information content is the same. Diagrammatic information sources have a standard grammar and vocabulary of diagram components, differing between domains, so any symbolic content can be translated into text with no ambiguity. All of the information in the content is therefore amenable to translation into text, which can then be communicated to the blind user. The symbolic content in diagrammatic information sources is generally constrained to nodes in a connected graph. The edges between nodes represent relationships between elements in the diagram. The spatial layout presents the contents clearly for sighted people (e.g. nodes should not overlap; edges should be clearly distinguishable from each other).

Spatial relationships embodied in these diagrams can be hierarchical: sets of nodes can be regarded as single entities at a higher level of abstraction. There are conventions for representing this, usually implicitly by grouping the nodes close to each other and away from other nodes.

Figure 3: Two representations of a diagrammatic information source, a UML diagram (from OMG 2004). The different layouts do not change the information content.
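The claim that two layouts of a diagram carry the same information content can be made concrete with a small sketch. It assumes a diagram is held as positioned nodes plus edges between node names; the `Node` type, the `connectivity` and `neighbours` functions, and the example class names are all hypothetical and are not taken from Figure 3. Edges are treated as undirected for simplicity, which is a further assumption, since many technical diagrams have directed relationships.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A diagram node: symbolic content (name) plus its position on the page."""
    name: str
    x: float
    y: float


def connectivity(nodes, edges):
    """Return the layout-free content of a diagram.

    Positions are discarded; only node names and the undirected
    links between them are kept.
    """
    names = frozenset(node.name for node in nodes)
    links = frozenset(frozenset((a, b)) for a, b in edges)
    return names, links


def neighbours(name, edges):
    """List, in alphabetical order, the nodes connected to the named node."""
    connected = set()
    for a, b in edges:
        if a == name:
            connected.add(b)
        elif b == name:
            connected.add(a)
    return sorted(connected)


# The same hypothetical diagram drawn left-to-right and top-to-bottom.
edges = [("Customer", "Order"), ("Order", "Product")]
layout_a = [Node("Customer", 0, 0), Node("Order", 100, 0), Node("Product", 200, 0)]
layout_b = [Node("Customer", 50, 200), Node("Order", 50, 100), Node("Product", 50, 0)]

same_content = connectivity(layout_a, edges) == connectivity(layout_b, edges)
order_links = neighbours("Order", edges)
```

Comparing only names and links makes the two layouts indistinguishable, which is the sense in which layout is not part of the information content here. The `neighbours` list is the kind of connectivity information that could be read out as text.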

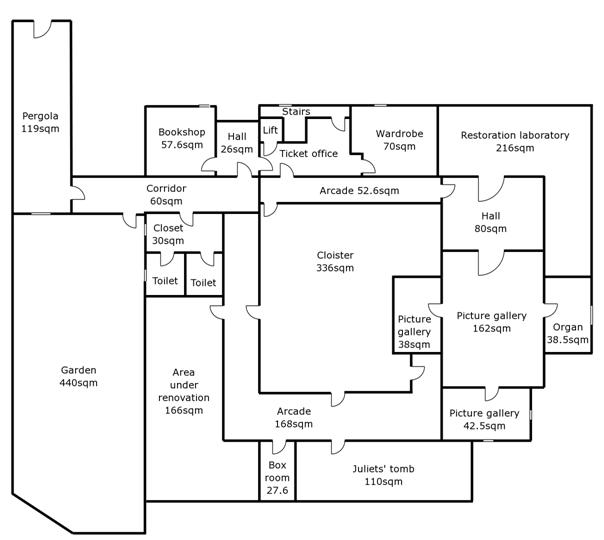

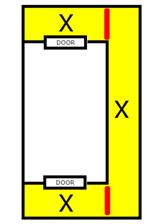

2.2.3. Pictorial information sources

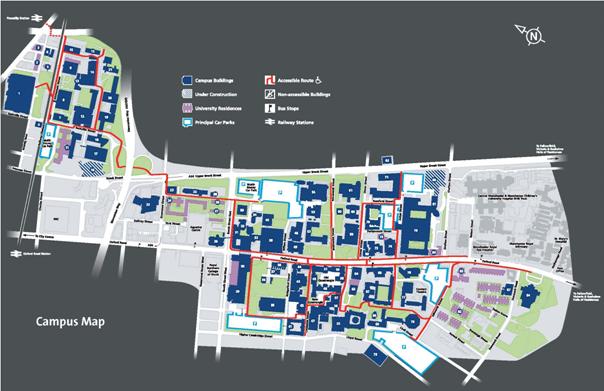

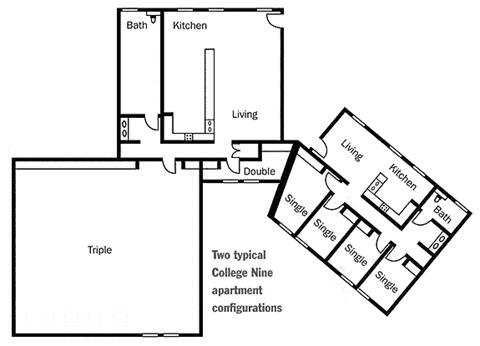

The third and final information source type is pictorial. Pictures utilise pictorial, graphical and text elements composed in a two-dimensional plane, just like diagrams. However, in pictorial information sources, visual content does not structure or supply semantics but is itself content, and it is not convertible into text like graphical symbols in technical diagrams. Examples include maps and architectural floor-plans (Figure 4 and Figure 5). In diagrams connectivity is important but not layout. In contrast, in pictorial information sources layout, shape and position are all important.

Figure 4: a pictorial information source: a map. From http://estatesoffice.man.ac.uk

Figure 5: a pictorial information source: a floor-plan. From http://collegenine.ucsc.edu/apartments.shtml.

Figure 6: The floor-plan from Figure 5 with the spatial layout altered. Unlike the change in layout shown in Figure 3, this does change the information content of the information source.

2.3. Re-presenting the different information sources

Blind people need access to re-presentations that support problem-solving and particular tasks. For sighted people this support is provided by visual content, notably spatial layout. Each class of information source uses spatial layout differently. The different tasks and roles for spatial layout mean that the different information sources must be re-presented in different ways.

2.3.1. Re-presenting textual information sources

Textual information sources use visual content for structuring the information and applying semantics to symbolic content. Re-presenting textual information sources is therefore relatively straightforward. Bodies of text can be communicated through speech. It is customary to provide some simple navigation, such as chapter and section for books, or division into stories and sections for newspapers. These are provided by simple hierarchies and linear lists of headings or indices. This is intended to improve comprehension (e.g. Truillet et al., 1998). For example, audio cassette recordings simply feature recordings of the source spoken by a person and state the headlines or chapter information. Different sections are indicated by periods of silence or by mapping the document to the physical tapes (e.g. one chapter per tape for an audio book) [3]. The DAISY system (DAISY, 2004) provides documents as multimedia Digital Talking Books (DTB). Each DTB contains some combination of audio recordings and text equivalents. The text equivalents can consist of anything from a simple title (to allow the book to be identified) to a table of contents or index, to a complete transcript of the audio recordings. DTBs can even consist only of electronic text, allowing the DTB content to be accessed by text-to-speech or Braille rather than by playing an audio recording. Synchronised text and audio allow users to search the text and hear the results in audio, and the structure provided by the text allows the user to navigate around the DTB.

Publishers have established routes for distribution of their content in these accessible formats to a blind readership. These have traditionally involved the distribution of audio tapes or Braille. Modern technology has permitted new distribution methods. Bormans and Engelen (1998) describe how the Flemish newspaper De Standaard is delivered to readers daily by floppy disk or email as a structured document read by special reader software. The Talking Newspaper Association of the United Kingdom (TNAUK 2004) provides a similar service. Despite these developments, most printed information is not available to blind people through Braille or audio. The RNIB estimates that 95% of published material in the UK is never produced in an accessible format (Mann et al. 2003). What is available may not be available in every accessible format (Lockyer et al. 2004). The prospect of access to print information via electronic distribution over the Internet of print documents in their original electronic forms is an exciting one, although one beset by economic and legal issues (Music Publishers Association 2001, Mann et al. 2003).

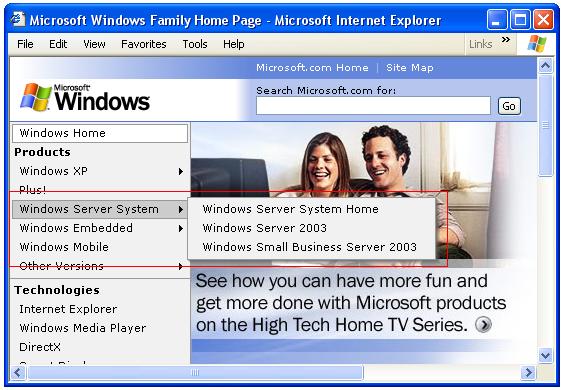

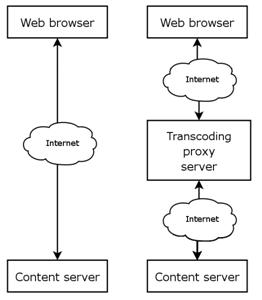

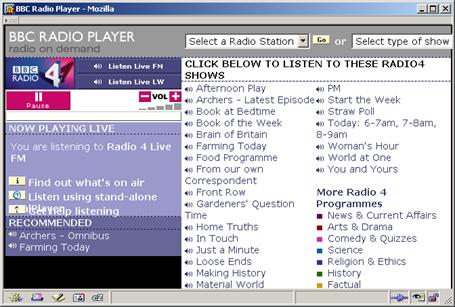

There is one textual information source that is widely available and potentially accessible: web pages on the World Wide Web. Access to billions of web pages is a great opportunity for blind people, but there are numerous complications. The subject of access to the Web by blind people is covered in depth in Chapter 4. It is important to note here that although each individual web page is a textual information source, the Web as a whole is not. The Web consists of a corpus of documents which must be navigated and searched to identify documents of interest to the user. This is analogous to files in a file system, nodes in a graph or books in a library. Users of such corpora often employ information foraging techniques, the process of finding a document of interest (e.g. the process of finding a web page with a recipe for chicken soup) described in Pirolli and Card (1999). Users typically examine many documents, assessing the usefulness or relevance of each before deciding whether to read and digest it or move on to try another document. Different techniques can be employed depending on such factors as how easy it is to obtain another document (very easy on the Web), the ability of the user, and the nature of the information being sought. Supporting this behaviour is important for any system that presents textual information sources with multiple documents. However, it is not important to provide a spatial representation of the Web when doing so, although it has been attempted (Chen and Czerwinski, 1998). Sighted users handle web navigation without an explicit spatial presentation, implying that blind people will not be disadvantaged by a lack of such a presentation. [4]

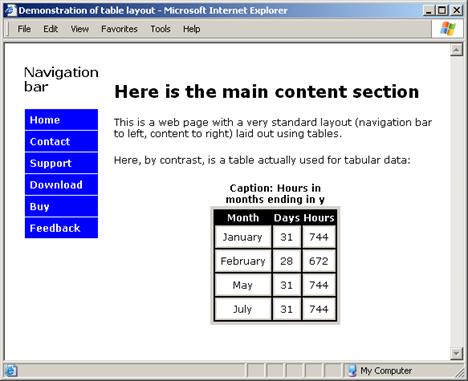

The structure and semantics of a textual information source may have to be determined from the visual content if they are not available in the textual information source itself. For example, web pages should explicitly identify headings that define the page’s structure, but if headings have not been provided then the structure of the page may have to be extracted or inferred from the visual content of the web page. However, this is the extent to which the visual content influences the basic process of extracting and re-presenting the text content. There is no need to provide the visual content itself to blind people because it does not itself provide any information. For example, in Figure 1 the position of the three articles on the page is not material. The fact that there are three articles, and the headlines and body text, is material and useful. This structure is obtained from visual examination of the newspaper, but could easily be presented without any visual content to a blind user, e.g. “Kaleo newspaper. Three articles. Article 1, Cement strike, weather slow La’ie projects. Article 2, Kahuhu Hospital update…”
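A spoken overview of this kind can be generated mechanically once the structure has been extracted. The following is a minimal sketch under stated assumptions: the `Article` and `Newspaper` types and the `spoken_overview` function are hypothetical, and the newspaper title and headlines in the example are invented for illustration rather than taken from Figure 1. It shows only that the count of articles and their headlines can be presented with no reference to position on the page.

```python
from dataclasses import dataclass, field


@dataclass
class Article:
    """One article: its headline and body text, with no layout information."""
    headline: str
    body: str = ""


@dataclass
class Newspaper:
    """A newspaper page reduced to its title and an ordered list of articles."""
    title: str
    articles: list = field(default_factory=list)


# Small counts are spelled out, as a human reader might say them.
NUMBER_WORDS = {1: "One", 2: "Two", 3: "Three"}


def spoken_overview(paper: Newspaper) -> str:
    """Build a single line of text suitable for a screen reader to speak."""
    count = len(paper.articles)
    count_word = NUMBER_WORDS.get(count, str(count))
    noun = "article" if count == 1 else "articles"
    parts = [f"{paper.title} newspaper.", f"{count_word} {noun}."]
    for number, article in enumerate(paper.articles, start=1):
        parts.append(f"Article {number}, {article.headline}.")
    return " ".join(parts)


# Invented example content.
paper = Newspaper(
    title="Example Gazette",
    articles=[
        Article("Harbour bridge reopens"),
        Article("School fair raises funds"),
    ],
)
overview = spoken_overview(paper)
```

The overview gives the user the same browsing step a sighted reader gets from glancing at the page: how many articles there are and what each is about, before committing to reading any body text.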

2.3.2. Re-presenting diagrammatic information sources

Diagrammatic information sources have an additional consideration: the visual content of the information source, in this case spatial layout. This may help sighted users to problem-solve: it is not explicitly part of the information content of the diagram in the way that connectivity information is. There are three ways to handle the spatial layout:

1. Remove the spatial layout. The first approach is to ignore spatial layout. For example, the UML diagram shown in Figure 3 might be re-presented as a set of hypertext pages with the connections forming links between them. The location of each node in the connected graph need not be communicated to the user. Only the connectivity information needs to be provided. The information source can be communicated in an accessible format, probably a structured text presentation. This means that any benefit that sighted users gain from knowing the layout of the items in the diagram is unavailable to the blind user. Connectivity must still be presented but as lists of connected nodes. A system that took this approach and presented technical diagrams (software engineering diagrams) without attempting to present the spatial information of the original diagrams is described in Blenkhorn and Evans (1994). It was found to be effective in Petrie et al. (1996). The advantage of this approach is that it does not require any special audio or haptic interfaces and attempts to communicate the least information. This may be more effective for blind people who can ignore the spatial layout and get on with working with the diagram.

2. Communicate what the spatial layout means. The second approach is to take into account what the spatial layout means, if anything, and communicate this information rather than the spatial layout itself. For example, certain combinations of elements in electronic circuits create higher-level aggregate elements. These aggregated elements might be identified and communicated to the user, although the process of identification is likely to be difficult: Chapter 3 describes an attempt to do this for electronic circuit diagrams, where structure was communicated by a re-presentation of the elements in a compositional hierarchy. This involves presenting the user with more complex information than removing the spatial layout entirely, but does not have to involve special audio or haptic interfaces. It may of course be more efficient than removing the spatial content because the added structure may help the user to make sense of and use the diagram.

3. Present the spatial layout to the user. The third approach to handling the spatial layout is to communicate it to the user directly. This can be done in many ways, many of which require special equipment such as spatialised speech or sound or the use of force-feedback devices. The spatial layout can be communicated either absolutely, maintaining the position of elements in the re-presentation relative to their original positions within the printed area of the diagram, or relatively, maintaining the position of each element relative to each other but ignoring their absolute position. Absolute positioning provides a convenient frame of reference, but may require more information to be provided. In general the choice between absolute and relative will be made according to the requirements of the information source being re-presented and the interface developed. For example, an interface might provide spatial information by means of an absolute coordinate system. However, for diagrammatic information sources the absolute position is not important while the relative position of elements is important. Spatial layout is used to define and structure, and is not important in and of itself: the positions of elements are important in how they relate to each other, not in how they relate to the containing diagram. This is the solution most likely to present the user with a complex audio or haptic interface to convey the spatial information. This may be difficult and inefficient, so there must be a reason to attempt it.
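The difference between the first and third approaches can be illustrated with a minimal sketch. It is not taken from any of the systems cited: the circuit elements, their coordinates and the function names are all hypothetical, and the page is assumed to use (x, y) coordinates with y increasing downwards. The first function produces the lists of connected nodes that remain once layout is removed; the second describes one element relative to another, which is unaffected by where the diagram as a whole sits on the page.

```python
from collections import defaultdict

# Hypothetical diagram: connections and absolute page positions (x, y).
EDGES = [("Battery", "Switch"), ("Switch", "Lamp"), ("Lamp", "Battery")]
POSITIONS = {"Battery": (10, 50), "Switch": (60, 10), "Lamp": (110, 50)}


def connectivity_lists(edges):
    """Approach 1: return each node with a sorted list of connected nodes."""
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    return {node: sorted(linked) for node, linked in sorted(neighbours.items())}


def relative_position(origin, target):
    """Approach 3 (relative): describe where target lies relative to origin.

    Assumes y increases down the page, so a smaller y means "above".
    """
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    words = []
    if dy < 0:
        words.append("above")
    elif dy > 0:
        words.append("below")
    if dx < 0:
        words.append("left")
    elif dx > 0:
        words.append("right")
    return words


def shift(positions, dx, dy):
    """Move every element by the same offset, changing absolute positions only."""
    return {name: (x + dx, y + dy) for name, (x, y) in positions.items()}


if __name__ == "__main__":
    for node, linked in connectivity_lists(EDGES).items():
        print(f"{node}. Connected to: {', '.join(linked)}.")
    print(relative_position(POSITIONS["Battery"], POSITIONS["Switch"]))
```

Shifting every element with `shift` changes all the absolute coordinates but leaves the output of `relative_position` unchanged, which is the sense in which relative position, rather than absolute position, carries the structure of a diagrammatic information source.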

Spatial layout: the implications for problem-solving

Spatial layout is used in diagrammatic information sources to perform basic structural and organisational roles. For example, white space is used to differentiate articles in newspapers, and proximity is used to indicate label attribution in diagrams. However, the spatial layout in a diagrammatic information source might also contribute to problem-solving by sighted people. For blind people to take advantage of the spatial layout they must be able to obtain and retain some of the spatial information provided by the diagram from the spatial re-presentation presented to them. For example, if the re-presentation involved learning the diagram layout through spatialised sound effects, they must be able to use these sound effects to build some kind of mental map. Having built a mental map, a blind person might be able to use it for problem-solving in a similar way to the mental map built by a sighted person.

Assuming that these two requirements can be met, the spatial layout of a diagram may be of use to a blind person. It follows that while building a mental map should not be required to use an information source (so a user should be able to retrieve a poem from a book by text search without having to remember the chapter structure), the creation of a mental map should be facilitated.

Figure 7: A simple diagram (a UML Class diagram)

The three approaches to spatial layout in diagrams might each have different effects on the development of a mental map:

1. Removal. Ignoring the spatial layout in the diagram forces the blind user to construct a mental map in isolation from the spatial model represented by the visual presentation. The mental map will reflect how the diagram is presented to the blind user, not its original spatial layout. For example, if a diagram is presented as a list of elements, then the blind user’s mental model will be a list. This does not support the problem-solving attributes of the original diagram. For example, a re-presentation of the simple diagram in Figure 7 might simply provide the elements in an alphabetically-ordered list with connections between them noted: a sighted person, however, can clearly see that the diagram has a hierarchical structure, and that the Animal class is at the apex of it. A sighted user may more easily solve problems related to the relationship between the classes with this knowledge.

2. Communication of the meaning. Providing the meaning of the spatial layout of the diagram should create a mental map with informational equivalence. For example, Figure 7 might be re-presented as a hierarchical tree such as that found in Windows Explorer, with the Animal class at the top. If it is assumed that this mental map is the same as the mental map of the sighted user, then this may be an efficient re-presentation of the information. If, however, obviating the need to build the map removes the process by which the map is developed, then the blind user loses any benefit. For example, if the benefits of recognising the hierarchical structure of Figure 7 are only obtained if the structure is committed to a mental map, then providing this structure without requiring the user to learn it is of no benefit.

3. Presentation of the spatial layout. For example, the user might be provided with the spatial layout of every class in Figure 7 through an audio or haptic interface. Communicating the actual spatial layout assumes that the blind user builds up their mental map in the same way as the sighted user. If this is true, it may result in a mental map that is better than an inferred one and more effective for problem-solving. This assumes that the layout of the diagram is designed for problem-solving, that the map that the blind user builds up also supports problem-solving, and that the user is able to problem-solve with it.
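The contrast between the first two approaches can be made concrete with a short sketch. It is illustrative only: the text states only that the Animal class is at the apex of Figure 7, so the other class names below are hypothetical, as are the function names. The same generalisation links are re-presented first as an alphabetical list with connections noted (removal), then as an indented tree with the apex class first (communication of the meaning).

```python
# Generalisation links as (child, parent) pairs. Only "Animal" at the apex
# comes from the text; "Cat", "Dog" and "Puppy" are hypothetical classes.
GENERALISATIONS = [("Dog", "Animal"), ("Cat", "Animal"), ("Puppy", "Dog")]


def as_flat_list(links):
    """Removal: alphabetical list of classes with their connections noted."""
    names = sorted({name for link in links for name in link})
    lines = []
    for name in names:
        parents = sorted(parent for child, parent in links if child == name)
        note = f" (specialises {', '.join(parents)})" if parents else ""
        lines.append(name + note)
    return lines


def as_tree(links):
    """Meaning: indented hierarchy with the apex class listed first."""
    children = {}
    for child, parent in links:
        children.setdefault(parent, []).append(child)
    all_children = {child for child, _ in links}
    roots = sorted({parent for _, parent in links} - all_children)

    lines = []

    def walk(node, depth):
        lines.append("  " * depth + node)
        for child in sorted(children.get(node, [])):
            walk(child, depth + 1)

    for root in roots:
        walk(root, 0)
    return lines


if __name__ == "__main__":
    print("\n".join(as_flat_list(GENERALISATIONS)))
    print("\n".join(as_tree(GENERALISATIONS)))
```

Both outputs contain the same connectivity information. Only the second makes the hierarchy explicit, so that the user does not have to reconstruct it from the individual connections.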

The basis for presenting the spatial layout to the user is therefore the assumption that a spatial layout provides a better mental map and therefore understanding of the diagram and support for problem-solving than the simpler approaches of removing spatial layout or communicating its meaning. This will be examined in Chapter 3.

2.3.3. Pictorial information sources

Pictorial information can be presented directly by a tactile diagram. These are sheets of material embossed with raised lines, characters and textured areas which allow the user to feel the content and explore it by touch. Braille embossed on the material presents text. Tactile diagrams can maintain the absolute spatial and positional information content of the printed version. For example, if a floor-plan diagram depicts a room in a certain position and with a certain shape, this can be perceived directly by feeling the room on the tactile diagram [5]. A tactile diagram showing the Microsoft Windows ’95 operating system desktop is shown in Figure 8.

Figure 8: A tactile diagram, showing Braille used for text. From 'Windows 95 Explained: a Guide for Blind and Visually Impaired Users', by Sarah Morley 1997, published by the Royal National Institute of the Blind, UK. Used with permission.

Tactile diagrams have a long and successful history, having been produced by charities and organisations for Braille books and educational materials for many years (for example, Cote et al. 2004). Metal and plastic diagrams are used for permanent diagrams. Paper has been used as it is cheaper and paper diagrams are easier to produce in large quantities, though it does not wear as well. A recent innovation has been the introduction of swell paper, a type of paper that when heated swells in size (National Centre for Tactile Diagrams, 2004). This can be printed on by a normal desktop printer in black ink, and when passed through a heating machine the black-printed areas absorb more heat and swell. This allows blind users to produce tactile diagrams quickly and easily, independently of sighted intermediaries. However, this assumes that a tactile diagram created without any sighted intervention will be usable, and it probably will not. Documents for sighted people contain a great deal of information: many objects, much text, lines and shapes in abundance. While sight can discern and resolve these easily, a straight tactile diagram of the same document is likely to be impossible to understand because tactile resolution is poorer than visual resolution (Sekuler and Blake, 1992). Non-Braille text from the original will almost certainly be unintelligible, too small and unclear, and graphics will be impossible to identify or resolve. For these reasons, tactile diagrams for books and reference materials have generally been specially redesigned and converted by sighted people according to guidelines developed over many years. Levi and Amick (1982) discuss the production of tactile diagrams. Experts in the field, they state that “figures cannot simply be magnified and copied verbatim (sic) as raised-line drawings. The process of translating a visual picture into a tactual representation, no matter how it is produced, requires interpretation”.
They also state that not all diagrams are suitable for conversion into tactile diagrams and give production guidelines, for example: important parts of the diagram must be carefully delineated by being rough or highly embossed; all lines must be separated by 6mm lest they merge; shapes such as arrowheads are hard to recognize if they are less than 13mm square, and small triangles or shapes often go unrecognized; 6mm is needed for each Braille cell. These figures are for higher-resolution plastic, not paper. All these factors militate against an automated method for turning complex graphics into tactile diagrams without human intervention by simply printing them on swell paper. Aldrich and Sheppard (2001) provide more recent information on the use of tactile graphics in education, TAEVIS (2002) gives modern production guidelines, Berla (1982) adds information on the vital role of diagram producers and teachers, and Horsfall (1997) and Wild et al. (1997) give updates. All stress the vital role of good tactile diagram design and translation in creating a usable tactile diagram. There is no automated process for producing tactile diagrams directly from print diagrams.

Another approach is to use a tactile diagram in conjunction with a tactile tablet, a touch-sensitive panel on which the tactile diagram is laid. The tablet is connected to a computer that relates locations on the panel to locations in the diagram. The user can then explore the tactile representation of the diagram and have supplementary information, for example what text lies at the current position indicated by the user, presented by the computer. For example, if a user is exploring a map of Europe, they might press on the raised dot indicating Paris and hear a description of the city read out by the computer. This approach provides a reproduction of the original spatial and positional information but allows more information to be placed on the diagram than would be permitted by Braille on the paper. The first system of this type was the NOMAD system (Parkes, 1988), later developed into the Tactile Audio Graphics (TAGraphics) system (Parkes 1994, Parkes 1998). This is commercially available (TGD 2004). Further examples of this type of system in use include Fanstone (1995), who describes a system used to present a university campus map, the TACIS system described by Gallagher and Frasch (1998), the Talking Tactile Tablet (Touch Graphics, 2004) and the DAHNI interface developed in the ACCESS project (Petrie et al. 1997). However, all of these systems suffer from the same problem: they require sighted intervention and expertise to create truly useful diagrams. In the NOMAD-style systems, this might involve creating an electronic representation of the diagram rather than re-drawing the diagram, but the work is required all the same [6].
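The core of the tablet approach, relating a touched location on the panel to an item in the diagram, can be sketched as a simple hit test. This is an illustration under stated assumptions rather than a description of NOMAD or any other cited system: the region list stands in for the electronic representation that a sighted person must prepare, and the names, sizes and coordinates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A labelled rectangular area of the diagram, in diagram coordinates."""
    label: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px, py):
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def tablet_to_diagram(touch, tablet_size, diagram_size):
    """Scale a touch position on the tablet into diagram coordinates."""
    tx, ty = touch
    return (tx * diagram_size[0] / tablet_size[0],
            ty * diagram_size[1] / tablet_size[1])


def label_at(touch, regions, tablet_size, diagram_size):
    """Return the label of the first region under the touch, or None."""
    px, py = tablet_to_diagram(touch, tablet_size, diagram_size)
    for region in regions:
        if region.contains(px, py):
            return region.label
    return None


if __name__ == "__main__":
    # Hypothetical annotation of a map: one raised dot marking Paris.
    regions = [Region("Paris", 480, 300, 20, 20)]
    tablet = (300, 200)     # touch-sensitive area, arbitrary units
    diagram = (1200, 800)   # diagram area, arbitrary units
    print(label_at((122, 77), regions, tablet, diagram))
```

In a real system the returned label would be passed to speech or Braille output. The sketch also makes the dependency plain: without the prepared list of regions, a touch position cannot be related to any content.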

The Science Access Project described in Gardner and Bulatov (2001) illustrates a possible automated solution, which is to use not raster bitmap graphics, which require analysis and annotation by sighted people, but vector-based formats which can be rendered for sighted people but are also amenable to automated transformation. For example, the system described by Bulatov and Gardner is intended to use files in the Scalable Vector Graphics (SVG) format (Ferraiolo et al. 2003). SVG files can name graphical items in the diagram, and explicitly define their position and area. This means that it might be possible to transform the diagram automatically, for example mapping the diagram area on to the tactile tablet area and providing meaningful content for each point. However, it does rely on SVG authors to comply with the format’s accessibility features (McCathieNevile and Koivunen 2000). Since the annotation and structure that would make SVG files amenable to automated translation are not required for meaningful visual rendering, it is probable that most files will be inaccessible: Chapter 4 describes the low rates of use of similar structural and accessibility features in HTML. The approach will only be productive if more proprietary data formats move into SVG-like formats and applications that generate SVG produce meaningful and accessible content. As it is, Bulatov and Gardner’s latest research appears to concentrate instead on navigation around a bitmap image using a system that is predicated on the frequent and simple production of tactile diagrams and their careful exploration, rather than extraction of the content of the diagram and its re-presentation (Gardner and Bulatov, 2004). SVG was used by Campin et al. (2003) as the data format to store tactile diagrams (maps), but these files were not generated from original SVG sources obtained from sighted people: they were created by hand by sighted people with the intention of supplying them to blind people.
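A minimal sketch of what such automated extraction might look like is given below, using Python's standard `xml.etree.ElementTree` module. It is not the method of the Science Access Project. The sample SVG document is hypothetical, and the sketch assumes that authors have named shapes with the SVG `title` and `desc` child elements.

```python
import xml.etree.ElementTree as ET

SVG_NS = {"svg": "http://www.w3.org/2000/svg"}

# Hypothetical SVG source: two named shapes and one shape with no title.
SAMPLE = """<svg xmlns="http://www.w3.org/2000/svg" width="200" height="100">
  <rect x="10" y="10" width="80" height="40"><title>Kitchen</title></rect>
  <rect x="100" y="10" width="80" height="40"/>
  <circle cx="50" cy="80" r="10">
    <title>Stairs</title>
    <desc>Leads to first floor</desc>
  </circle>
</svg>"""


def named_items(svg_text):
    """Return the shapes that carry a title, with their geometry attributes."""
    root = ET.fromstring(svg_text)
    items = []
    for element in root.iter():
        title = element.find("svg:title", SVG_NS)
        if title is None or not (title.text or "").strip():
            continue  # unnamed shapes cannot be re-presented meaningfully
        desc = element.find("svg:desc", SVG_NS)
        items.append({
            "shape": element.tag.split("}")[-1],  # strip the namespace prefix
            "title": title.text.strip(),
            "description": (desc.text or "").strip() if desc is not None else "",
            "attributes": dict(element.attrib),   # e.g. x, y, width, height
        })
    return items


if __name__ == "__main__":
    for item in named_items(SAMPLE):
        print(item["title"], item["shape"], item["attributes"])
```

The second rectangle is rendered for sighted users like any other shape but is skipped here because it has no title, which illustrates why the approach depends on authors supplying the annotation.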

Alternatives to tactile diagrams

All of the interfaces described above assume the use of tactile diagrams or a tablet interface to allow users to directly access a representation of the diagram that is spatial, so there is an a priori justification for claiming that the visual content of the original diagram is preserved and that the blind user may build up a mental model of the diagram that is consistent with that of a sighted person. In contrast, other systems attempt to present the visual content through spatialised audio, haptic devices such as joysticks, or text descriptions, and these will work only if blind people can synthesise the results into a coherent mental model. If this can be accomplished then the problem of creating tactile diagrams that work is avoided, so it may be easier to create an automated system.

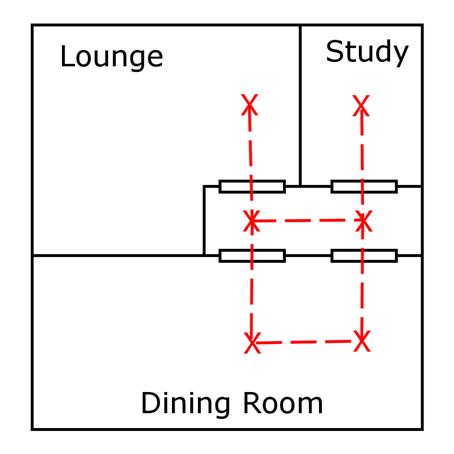

The challenge for such a system would be how to handle the visual content without tactile diagrams. Yu and Brewster (2002) describe one such system for presenting simple bar charts using a joystick and audio and evaluated it against tactile diagrams. The results suggested that the audio-haptic system was at least comparable to the tactile diagrams for this restricted and simple type of diagram. However, visual content plays a more important role in pictorial information sources than it does in diagrams, since it does not simply structure and assist problem-solving but also provides content in and of itself, such as layout and shape in a floor-plan. The difficulties of re-presenting such visual content to users suggest that no pictorial information source will be suitable for automated re-presentation, but that the task should be left to tactile diagrams. If, however, maps and floor-plans are compared, it will be observed that although they are both pictorial information sources they are quite different in the spatial information they contain. While maps generally consist of elements freely positioned in two dimensions, or unbounded, floor-plans are bounded: they generally consist of a set of restricted areas (rooms) with connections between them (doorways). Floor-plans therefore might be re-presented as a connected graph, just like a diagrammatic information source, where the rooms are nodes and the doorways edges. Figure 9 shows an attempt to depict the floor-plan from Figure 5 in this manner: the unnamed hallway has been split into two nodes to represent the shape and spatial layout of the floor-plan. The connectivity between rooms can be preserved. The studies on spatial information and blind people found that blind people used landmarks for navigation, which fits in with this model if each room is seen as a landmark. This is of course a great simplification of the content of an architectural diagram.
Such a model would be useless at depicting shape and orientation, for example. However, there may be uses for such a system, such as gaining an understanding of the connections between rooms in an office or being able to structure a tour of a museum around the layout of rooms, and it can conceivably support a process of automated re-presentation without a sighted intermediary.

Figure 9: Part of the floor-plan from Figure 5 depicted as a graph of nodes.

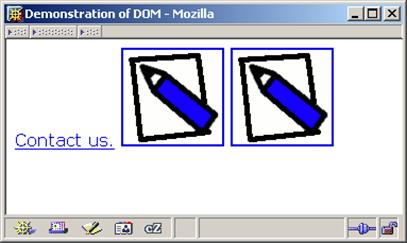
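The floor-plan-as-graph idea can be sketched in a few lines. The example below is a hypothetical illustration, not the content of Figure 9: the room names are invented, although the hallway is split into two nodes as described above. Rooms are nodes, doorways are edges, and a breadth-first search gives a room-by-room route of the kind that might support structuring a tour.

```python
from collections import deque

# Hypothetical floor-plan: each pair is a doorway joining two rooms.
DOORWAYS = [
    ("Entrance", "Hallway (south)"),
    ("Hallway (south)", "Hallway (north)"),
    ("Hallway (south)", "Kitchen"),
    ("Hallway (north)", "Office"),
]


def build_graph(doorways):
    """Map each room to a sorted list of the rooms it opens on to."""
    graph = {}
    for a, b in doorways:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return {room: sorted(linked) for room, linked in graph.items()}


def route(graph, start, goal):
    """Breadth-first search: a route with the fewest doorways, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbour in graph.get(path[-1], []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None


if __name__ == "__main__":
    graph = build_graph(DOORWAYS)
    print(graph["Hallway (south)"])
    print(" -> ".join(route(graph, "Entrance", "Office")))
```

As noted above, such a model preserves connectivity only: nothing in it records the shape, size or orientation of any room.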

2.3.4. Lessons from re-presenting GUIs

Two approaches have been described that attempt to provide blind users with the benefits of visual content: communicating the structure and meaning provided by visual content or presenting the visual content as it stands. This has parallels with different approaches taken by screen readers in the past. The increased use of Graphical User Interfaces (GUIs) in the 1990s, replacing command-line-driven systems, led to fears that blind people would be excluded from using computers since they were unable to use the increasingly-common point-and-click windowed graphical environments [7]. One approach to this problem was to attempt to communicate the visual content of the environment – windows, buttons, controls, and their position on the screen. Schwerdtfeger (1991) describes several such systems, such as OutSpoken for the Apple Macintosh and ScreenReader/PM for the IBM-PC, which required the blind user to operate a mouse and read the GUI component at the current mouse position. The PC-Access system described by DuFresne et al. (1996) took a similar approach, arguing that blind people use spatial information in handling real objects – for example, ordering diskettes, placing things in certain positions and remembering these locations – and so user interfaces should be based on such positioning. Mynatt and Weber (1994) describe another such system, the GUIB system. However, they also contrast it with a system called ‘Mercator’ (Mynatt and Edwards, 1992). Mercator does not attempt to present the visual content, but re-presents the interface as a hierarchically-organised set of user interface components accessed solely through the keyboard and sound/Braille display. Edwards et al. (1995) provide a theoretical basis for this approach, describing how the GUI can be represented as a system of hierarchically-organised affordances, a task-based representation designed to support work with the system rather than describe how the system appears to a visual user.
In fact, this re-presentation of the GUI as a set of components that can be navigated between with the keyboard and without regard to their actual spatial position is generally the approach taken by modern screen-readers [8]. Communicating the visual content was found to be less efficient than communicating what it implied (e.g. “disabled text box” rather than “wide grey text box”).

It is difficult to argue that one approach (re-presentation) has been successful, and one approach (presenting the original visual layout) unsuccessful, since this depends on so many factors (e.g. the cost of non-standard devices employed in the latter, such as tactile tablets and force-feedback mice, or the creation of a commercialised product from the research products). However, it is not unreasonable to observe the greater success of the re-presentation approach adopted by screen readers, and to suggest that this supports re-presenting the meaning of the visual content rather than presenting the visual content itself.

2.4. A model for re-presenting visual content

This section tries to create a model for re-presenting visual content, based on the research and technology described above, where the aim is to support automated processes of translation free of intervention by sighted people.

Visual content in information sources is used for different things, including providing structure and meaning and allowing sighted users to perform some tasks more efficiently (problem-solving). Studies indicate that blind people can build spatial mental maps, so communicating spatial layout might be of benefit to users if it allows them to build up these mental maps or supports problem-solving. The importance of visual content varies according to the class of information source.

Different information sources therefore require different approaches:

· Information sources that are primarily textual (e.g. web pages) should be re-presented as linear text. Structure and semantics should be inferred from the visual content and applied to the structure and content of the re-presentation.

· Information sources that are diagrammatic (e.g. electronic circuit diagrams) should be re-presented as a set of nodes. Any implicit information from the diagram (e.g. aggregation components) should be represented explicitly where possible. It is also reasonable to present the spatial layout of the diagram, with the goal of assisting blind users to build up a mental model and as a way to re-present the diagram content effectively.

· Pictorial information sources (e.g. maps) should be presented in a directly spatial format, using a tactile diagram. However, it is worth investigating whether modelling a bounded pictorial information source (e.g. a floor-plan) as a diagram (i.e. as a graph) is of benefit.

Chapter 3 describes an investigation into the re-presentation of a diagrammatic information source, technical diagrams, and a bounded pictorial information source, floor-plans. Chapter 4 discusses the re-presentation of a textual information source, web pages. Chapter 5 draws conclusions from both these investigations and relates their findings to this general position on the re-presentation of visual content to blind people.

3. A diagram access tool

A model for the presentation of diagrammatic information sources to blind people was described in Chapter 2. The principles are as follows:

· The information content of such sources is largely text, structured by layout and position.

· Graphical content has direct text equivalents.

· Re-presentation of the information source can be accomplished by communicating the text content and explicitly providing the structure implied in layout and position.

The spatial information in the diagram may still be of interest to a blind user, because the layout may assist in understanding the diagram or afford the resolution of particular tasks. However, there is a trade-off for the blind user because determining spatial position or layout is not as easy as for sighted people. Blind users should be presented with spatial information if the benefit of obtaining the spatial information is greater than the cost of the effort required. The benefit and cost will vary for different diagrammatic information sources and individual tasks.

This presentation model is investigated in this chapter for the domain of technical diagrams, such as electronic circuit diagrams or UML diagrams. Technical diagrams have a consistent and well-defined visual vocabulary. Depending on the diagram domain, layout and position may provide structure but are not part of the information content of the information source. Technical diagrams are therefore diagrammatic information sources as described in Chapter 2. Based on this, the assumption was made that communicating the spatial information in the diagrams would be of benefit to blind users. This was tested by a number of tools developed to present technical diagrams to blind people. The tools used a number of different user interface and information re-presentation approaches. The resulting diagram access tools were intended both to test the validity of the presentation model and to provide useful and practical re-presentation tools for the technical diagram types examined.

Section 3.1 outlines the basis for the TeDUB project that drove development of these diagram access tools and how it relates to this thesis. Section 3.2 describes the engineering approaches that can be employed. Section 3.3 describes the actual tools and evaluation of them by users. Section 3.4 draws conclusions on the success of the tools and the presentation of technical diagrams to blind people.

Chapter 5 combines the results of this chapter and the work on web accessibility described in Chapter 4 and draws conclusions on the re-presentation of visual content to blind people.

3.1. The TeDUB Project: Background

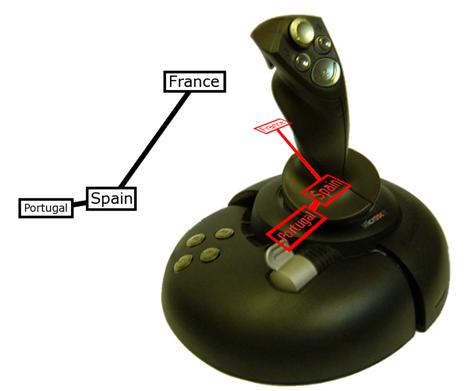

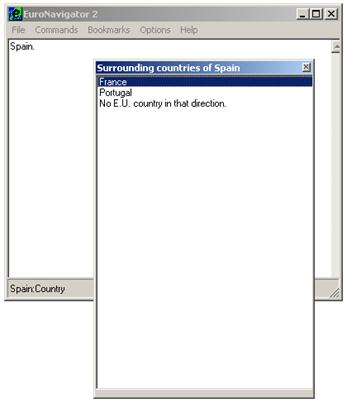

The Technical Drawings Understanding for the Blind project (TeDUB Project, 2004) was intended to allow blind people to read technical diagrams (Petrie et al., 2002). Technical diagrams can be obtained from many sources, for example scanned from print or obtained as bitmap images from the Internet. The TeDUB project was intended to take diagrams in electronic bitmap formats, perform image analysis to extract the diagram content, and re-present this content to a blind user. In practice working with bitmap images proved difficult and a way to import files from software engineering tools was developed. This chapter describes the development by the author of a set of tools intended to perform the re-presentation [9]: the image analysis is not relevant except where it affects the information available to re-present.

All user evaluations were performed and analysed by user groups in Ireland, the Netherlands, Germany and Italy under the supervision of the Centre for HCI Design [10]. Their results and conclusions are presented to inform the conclusions drawn by the thesis.

The TeDUB project focussed on three technical diagram domains. Two, electronic circuit diagrams and UML diagrams, are clearly diagrammatic information sources. The third diagram domain examined, floor-plan diagrams, is a pictorial information source, so the visual content of floor-plans is part of their information content. According to the framework presented in Chapter 2 this suggests that floor-plans are best presented to blind users through a direct tactile re-presentation using a tactile diagram. However, an attempt was made to use the tools developed for electronic circuits and UML diagrams because of a perceived demand for access to floor-plan diagrams and because it was an opportunity to try to extend the diagram presentation techniques developed for the diagrammatic information sources to examine whether they were still effective.

3.2. Potential presentation techniques

Without sight, there are two practical interfaces for communicating information: the sense of touch (haptic) and hearing (audio). Some systems employing these approaches have been described in Chapter 2 with reference to different models for the alternative presentation of visual information to blind people. This section provides a more detailed examination of the engineering practicalities of non-visual interfaces and how they might be employed to present visual content to blind people. Few of the systems given as examples rely exclusively on audio or haptic output: most combine them to some degree (e.g. Roth et al., 2001). They generally work in conjunction, reinforcing the output with a multimodal approach.

Throughout the following discussion it must be remembered that the majority of the information content of the diagrams of interest is communicated through text. This information is generally communicated to the user by a screen reader, a program that captures text information presented on the screen and outputs it to a Braille display line or through speech synthesis. It is up to an application to ensure that the text information that it presents is accessible to these screen-reader programs. This involves, for example, presenting text in standard operating system controls such as buttons rather than using inaccessible but novel controls that bypass the normal operating system drawing systems and generate their own text. Technologies such as Microsoft Active Accessibility (Sinclair, 2000) have provided application developers with the opportunity to help screen readers by crafting their applications specifically to support screen-reader interpretation. In the descriptions of different haptic and audio interfaces that follow, it should be noted that the user will be using a Braille display or speech synthesis to read the text content of the diagram in conjunction with or at the same time as the haptic or audio interface. These other interface features employed must therefore coexist with the fundamental screen-reader use. For example, in an interface using sound care must be taken that the information being conveyed does not conflict with speech output of any text currently being presented by the screen reader. The same general problem, in a more limited way because of greater user control over timing and activity, applies to input with a keyboard coexisting with a device using touch such as a Braille input mechanism or a joystick.

Instead of relying on a user’s screen reader, an application can be self-voicing: self-voicing applications are those that speak text aloud, without relying on a screen reader to capture text and present it to the user. This provides more flexibility for the developer, who has more control over speech output and can therefore employ different strategies such as spatialising speech around the user (employed for example in Crispien et al. 1994). However, there are significant disadvantages: the user must disable their own screen reader, or at least manage its conflicts with the application’s output [11]. This means that the user is cut off from their familiar screen reader, losing the ability to employ their established skills and strategies. The design of the diagram access tools for TeDUB therefore assumed the use of a screen reader and rejected the self-voicing approach.

The primary interface for the user with the tool will therefore be speech, augmented with other interfaces as necessary to communicate the spatial information in the diagrams. In text and speech, spatial information can be communicated only through description (e.g. “Left of this…”), which is at best cumbersome and at worst useless when diagrams move beyond very trivial levels of complexity. The alternative is to employ an interface that itself has a spatial element. Excluding visual interfaces, this leaves haptic and audio presentation.

3.2.1. Haptic, tactile and kinaesthetic presentation

Devices that employ the sense of touch to communicate are often described as haptic. However, haptic has a more precise meaning, a combination of the kinaesthetic and tactile senses (Sekuler and Blake, 1994). The kinaesthetic sense is a sense pertaining to the position or movement of the body, the ability to know location and relative position. It is therefore explicitly spatial. A computer mouse uses this sense. The tactile sense pertains to what is felt by the skin and especially the hand, including fine shape and texture and hardness. Braille uses the tactile sense to allow readers to feel letters with their fingertips. Because both senses work so closely together they need not generally be regarded as individual senses, and so the term haptic properly describes their combined effect. While the literature commonly refers to any device that uses the sense of touch in some way, tactile or kinaesthetic, as haptic, this thesis will use this stricter definition.

Srinivasan and Basdogan (1997) describe the human sense of touch and its relationship with haptics. They also provide a further useful category, that of point-based haptic interaction. This is where the haptic device is limited to a single point in space, such as the end of a powered lever (e.g. a joystick). A movement in real space is mapped to an action in the computer system, for example moving a pointer or computer avatar. Such devices are based on the perception of resistance to movement, or forced movement, of this single point. While surfaces of different textures and hardness can be modelled with accuracy, the limitation of the haptic interface to a single point makes it very difficult for users to use their hands and touch as they would with a real three-dimensional object. Users therefore do not benefit as much as they would from access to the real item that the haptic interface is representing. It is very difficult for users to discern tactile characteristics, including shape, since a point-based device does not support the normal haptic exploratory procedures that people use when presented with a new object, described in Klatzky et al. (1987). These procedures focus on texture and hardness, such as “this is smooth” or “this is soft”, and on exploration of the object with the hands, discovering shape and dimensions with the fingers and palms acting in conjunction. Using a point-based haptic device is like trying to examine an object by holding one end of a pencil and moving the other over the object.

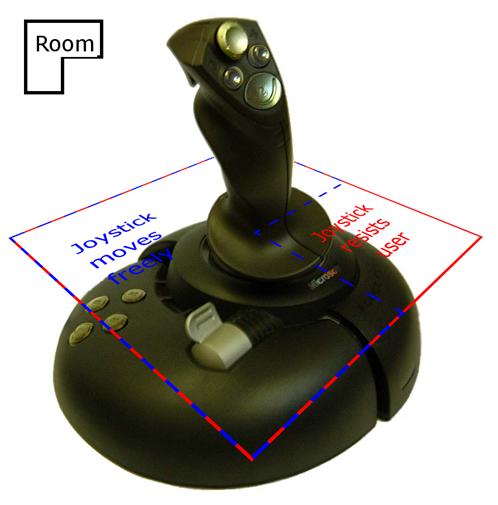

There exist specialised haptic devices with more capabilities, which are accurate and support three dimensions of movement. They are also all point-based. The leading high-resolution haptic device is the ‘PHANToM’ (Sensable Technologies, 2004), guidelines for the use of which are provided in Sjöström (2001). This allows force-feedback output to the user and operates in three (rather than two) dimensions. Use of such a joystick might afford opportunities for creating esoteric user interfaces exploiting these additional abilities, but the guidelines note that even with high-powered devices like the PHANToM communicating spatial information is difficult. Sjöström notes that finding items in haptic space is very hard, external corners disrupt the development of a mental model of the shape, and reference points are vital for successful use: these are all consequences of using a point-based haptic device. Other haptic devices for blind people have been based on PHANToM devices, such as the TACTICS system described in Fritz et al. (1996) that used a PHANToM to present a tactile representation of mathematical diagrams. Schneider and Strothotte (2000) described a map navigation system using a combination of a PHANToM joystick and bricks that can be laid to indicate routes, but they provide no user evaluation of the results. Ramloll et al. (2000) described an attempt to communicate line graphs to blind people with a PHANToM joystick and an audio soundscape. A pilot study with three blind users found that raised lines were difficult to track: lines depicted as grooves were more successful since the user could follow them with the joystick. Both the Schneider and Strothotte and the Ramloll et al. systems used the joystick to communicate the entire information source as though it were a real scaled tactile object in front of the user. However, these studies limited themselves to trying to describe very simple lines, rather than three-dimensional shapes.

The haptic limitations of a point-based device are considerable, since such devices do not permit normal human haptic behaviour with the full hand. The absence of any real product or application for these joysticks outside of development suggests that they are not suitable for communicating haptic information. They can convey direction and even position, but representing two- or three-dimensional shapes is too difficult.

Recent years have seen the appearance of cheap games joysticks with force-feedback functions that meet the requirements for basic haptic function described in Mark et al. (1995), with a high response rate maintained in hardware rather than the main control loop of the application. These types of devices are restricted to two-dimensional movement, so representations of data utilising a three-dimensional approach will be impossible with the devices alone (for example, the room metaphor described in Savidis and Stephanidis (1995)). They combine a kinaesthetic sense (where the joystick is) with a tactile one (modelling a surface) and so are 2D haptic devices. These are more limited than devices like the PHANToM but considerably cheaper. They share the limitation of being point-based and are therefore difficult to use to model spatial information. Johansson and Linde (1998, 1999) tested one such joystick for use with blind people, modelling a 3D maze of walls through which users had to navigate, and found it to be effective as a two-dimensional tactile device. The limitations of these games joysticks arise from their small capacity for exerting force, which means they are unable to model edges and surfaces accurately. They are also less able to represent absolute position in a plane via kinaesthetics, since their relatively weak and inaccurate servomotors cannot accurately position the joystick against the exploratory movement of the user’s hand with the level of force and resolution required. However, they can represent relative positions (e.g. “Up and left”) with more success since this requires less of the device. If direction and position alone can be represented, and that with some degree of inaccuracy, then games joysticks can compete. The ‘DAHNI’ system (Morley et al., 1998a) used just such a games joystick and, while it was not particularly popular with users, it was acceptable.

The ‘TGuide’ system described by Kurze (1998) required a specially built point-based haptic device that moved in two dimensions in much the same fashion as a games joystick, but with more accuracy. However, a general solution must use a commercially available device. Games joysticks are therefore suitable for examination in the TeDUB tools.
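
The point that a weak device can still convey relative position (e.g. “Up and left”) can be illustrated with a minimal sketch. It is not taken from the TeDUB tools or from DAHNI: the function name, the eight direction labels and the dead-zone threshold are all assumptions made for illustration. The sketch reduces a displacement between two positions to one of eight coarse directions, which is the kind of low-resolution information a games joystick might plausibly present.

```python
import math

# Hypothetical labels for eight coarse directions, listed anticlockwise
# starting from "right". These names are illustrative assumptions only.
DIRECTIONS = [
    "right", "up and right", "up", "up and left",
    "left", "down and left", "down", "down and right",
]


def relative_direction(dx, dy, dead_zone=0.2):
    """Quantise a displacement (dx, dy) into one of eight directions.

    dx is positive to the right and dy is positive upwards. Displacements
    shorter than dead_zone (an assumed threshold) return None, so that
    small, inaccurate movements are ignored rather than reported.
    """
    if math.hypot(dx, dy) < dead_zone:
        return None
    # Angle in degrees, measured anticlockwise from the positive x axis.
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Each direction covers a 45-degree sector centred on its own axis.
    sector = int((angle + 22.5) // 45) % 8
    return DIRECTIONS[sector]
```

For example, `relative_direction(-1.0, 1.0)` gives `"up and left"`. Only the sector matters, not the exact magnitude, so the mapping tolerates a considerable degree of positional inaccuracy in the device.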

Tactile force-feedback mice have also been used to create haptic interfaces (mice without force-feedback are again kinaesthetic rather than haptic devices). These are also cheaper than specialised haptic devices. Gardner and Bulatov (2001) created a system that allowed vector graphic SVG diagrams (Ferraiolo et al., 2004) composed of lines and points to be navigated hierarchically using a force-feedback mouse. However, no force-feedback mouse is commercially available. The force-feedback mouse is generally of the same class as a force-feedback joystick.

A standard mouse is an effective input device for sighted people, but it is useless for blind people since it is a relative positioning device. It is used in conjunction with a pointer indicating the point of action on the screen, but that pointer is unavailable to blind people. Even if it were made available through an audio representation, the amount of positional information that needs to be communicated is prohibitive. While some researchers have attempted to communicate mouse-driven systems to blind users (for example Weber et al. 1996) and some support for the mouse pointer is built into most screen readers, it is reasonable to note that mice are not used by blind people in general and conclude that the obvious problems of knowing where one is pointing, where one can go to point at something else, and communicating what is under the pointer all combine to make standard mouse use impractical for blind users. It cannot even be used to indicate direction. A standard mouse is therefore useless for a TeDUB tool.

Attempts have been made to develop tactile panels consisting of many hundreds of pins and able to present a whole tactile surface dynamically (for example, Wellman et al., 1998). This poses considerable technical challenges (making the interface work) and will pose considerable production challenges (making the resulting device affordable). Even if both of these problems are resolved it seems likely that these panels will have some of the problems of tactile diagrams produced without editing or careful production, in that the resulting surface will be too complicated to understand (e.g. reading areas of text, deciphering what elements are). This precludes automated transcription. Rotard and Ertl (2004) do, however, report some success in transforming and presenting bar diagrams.

One approach places a small panel of pins on top of a mouse which tracks its absolute position on the screen, so the device can indicate what lies under the mouse pointer (SeebyTouch, 2004; VirTouch, 2004). These devices have two problems. First, much like the point limitation of many haptic devices, only a tiny area is available for examination, and with the fingertips only: the user cannot use both hands to move over the diagram. Second, it is unlikely that users will be able to make sense of diagrams without extensive preparation (e.g. removing text, simplifying the image), and this precludes automated re-presentation. Wyatt et al. (2000) created a device using two refreshable Braille cells connected to a standard mouse. This still required preparation of the diagrams in electronic format to power the mouse output.

Finally, there are many other experimental and development tactile interfaces such as tactile gloves. MacKenzie (1995) provides details. However, these are all very much research projects, not contenders for use in the TeDUB project.

All of these systems require training and practice to be effective. This is demonstrated in Vitense et al. (2002), where sighted people were tested for their use of multimodal interfaces. A force-feedback mouse, sound and vision made a total of three modalities (haptic, audio and visual). Sighted people were tested using one, two or three of these modalities. Results indicated that unimodal feedback gave good performance and had a low perception of workload. Bimodal haptic and visual feedback came out best for the limited task tested. Trimodal feedback scored poorly on both performance and perceived workload. Interestingly, however, an objective physiological workload score (pupil dilation) indicated that this trimodal system had the lowest workload, contradicting the users’ expressed beliefs about their performance. This suggests that greater training with trimodal systems may lead them to be of benefit. Conversely, it suggests that the haptic interfaces presented to users should be as simple as possible since they will be difficult for users to pick up and use effectively within the limited time periods available for evaluation.

3.2.2. Audio: directional and non-speech sounds

The second potential way to present spatial, positional and other visual information is audio. (The special role of speech has already been described, and since speech was left entirely to the user’s screen reader the following discussion does not apply to any speech output from the TeDUB tools.) The factors involved in audio presentation are spatial (where a person perceives a sound as originating) and content (of what the sound consists).

Hearing is spatial. The general psycho-acoustical properties of hearing are described in Blauert (1983). Generally, humans are best at discerning the position and content of sounds along the left-right axis, largely because of the position of human ears at each side of the head. Forward-back and up-down identification is more difficult. In addition, pitch (frequency) of sound is frequently perceived as height, so high-pitched noises are perceived as coming from a higher position. This is advantageous in attempts to overcome the problems in trying to communicate height, since a system might use pitch instead, but disadvantageous if the system attempts to use pitch independently of height.
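
The suggestion that a system might use pitch in place of height can be sketched as follows. This is an illustrative assumption rather than the mapping used in any system described here: the function name, the normalised height range and the frequency limits of 220 Hz and 880 Hz are all hypothetical. The interpolation is geometric, so that equal steps in height correspond to equal musical intervals.

```python
def height_to_frequency(height, low_hz=220.0, high_hz=880.0):
    """Map a normalised height in [0, 1] to a tone frequency in hertz.

    A height of 0 (bottom of the diagram) gives low_hz and a height of 1
    (top of the diagram) gives high_hz. Both limits are assumed values
    chosen only for illustration. Heights outside [0, 1] are clamped.
    """
    h = min(1.0, max(0.0, height))
    # Geometric interpolation: the frequency ratio grows evenly with height.
    return low_hz * (high_hz / low_hz) ** h
```

With these assumed limits, an item halfway up the diagram would be played at about 440 Hz, one octave above the bottom and one octave below the top.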

The ability of modern inexpensive sound cards to support 2D and 3D sound is demonstrated in the systems produced by Drullman and Bronkhorst (1999), which spatialised speech from different sources around the user simultaneously using standard computer components. For the purposes of this thesis, it is sufficient to note that cheap standard hardware can now support the location of multiple sounds from anywhere around the user. Any limitation is in the hardware used to generate these sounds: if the user has a surround-sound speaker system, featuring at least four speakers, then sound can be located externally all around the user. If the user is using headphones (far more likely in, say, an office environment) or two speakers on the desktop, they will generally only be able to discern sounds along the left-right axis. Using headphones, they will locate sounds between the ears; using two speakers, sounds will be located from left to right across the user’s front (Hawley et al., 1999). Lorho et al. (2001) demonstrate that headphones are adequate for absolute sound localisation if the number of points is restricted to five, and that three are easily discerned by almost all users.
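
The restriction to a small number of left-right positions can be illustrated with a short sketch. The five-position limit follows the Lorho et al. result cited above, but everything else is an assumption made for illustration: the function names are hypothetical, and the constant-power pan law used to derive the channel gains is a standard audio technique substituted here, not one described in the cited work or used by the TeDUB tools.

```python
import math


def snap_to_positions(x, n_positions=5):
    """Snap a horizontal position x in [-1, 1] to the nearest of
    n_positions equally spaced points, where -1 is fully left and
    1 is fully right. Values outside the range are clamped."""
    x = min(1.0, max(-1.0, x))
    step = 2.0 / (n_positions - 1)
    index = round((x + 1.0) / step)
    return -1.0 + index * step


def stereo_gains(pan):
    """Return (left_gain, right_gain) for a pan value in [-1, 1] using a
    constant-power pan law, so that the squared gains always sum to one
    and perceived loudness stays roughly even across positions."""
    theta = (pan + 1.0) * math.pi / 4.0  # 0 = fully left, pi/2 = fully right
    return math.cos(theta), math.sin(theta)
```

A diagram element at horizontal position 0.3 would be snapped to the position at 0.5 and played with a larger right-channel gain than left. Passing `n_positions=3` gives the coarser left, centre and right scheme that almost all users can discern.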